Multisensory Environments

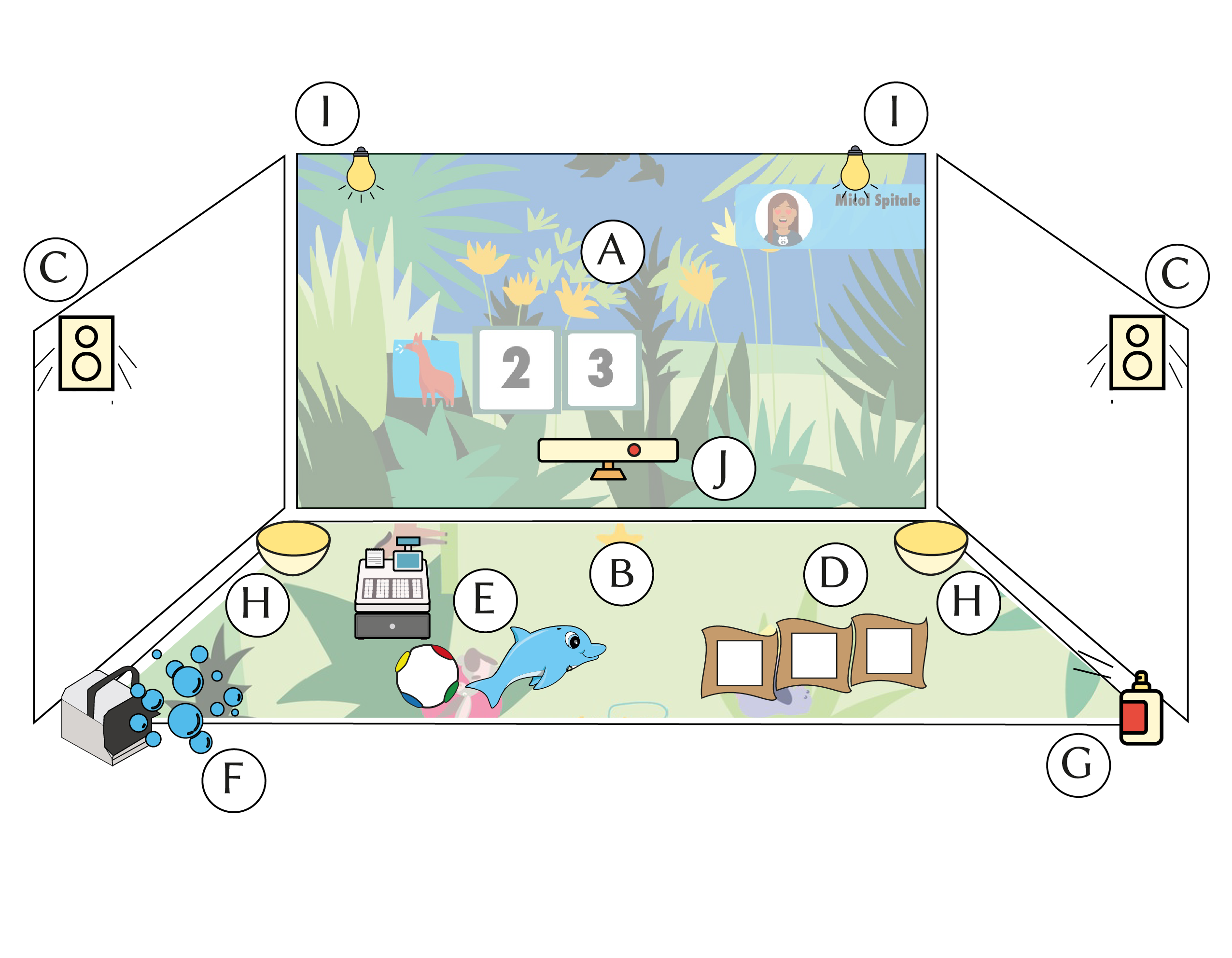

LUDOMI

Ludomi is the project, awarded by the Polisocial Awards 2017, in which we developed Magika. Magika is a technological solution that transforms any room into a “smart” space in which lights, immersive projections on walls and floors, music, sounds, aromas, and physical materials are digitally controllable, programmable, and interactive. The result is a “Magic Room” where children are exposed to multisensory stimuli and can interact with physical materials and multimedia content through movements, gestures, and manipulation of objects. Magika supports playful and educational activities (individual or collective) that stimulate all the senses and are engaging.

Magika is controlled and configured through a tablet. With an easy, intuitive control panel, teachers can select, monitor, and configure each activity and personalize the experience according to the specific needs of the children. It takes just a few steps to choose activities and to keep an eye on what is happening. An activity can be started or ended from the tablet at any time, which helps teachers manage the children inside the room. Compared to traditional multisensory rooms such as Snoezelen, the playful-educational experiences in the Magic Room have greater potential for children with cognitive disabilities because they are more customizable, stimulating, and engaging.

Virtual, Mixed and Augmented Reality

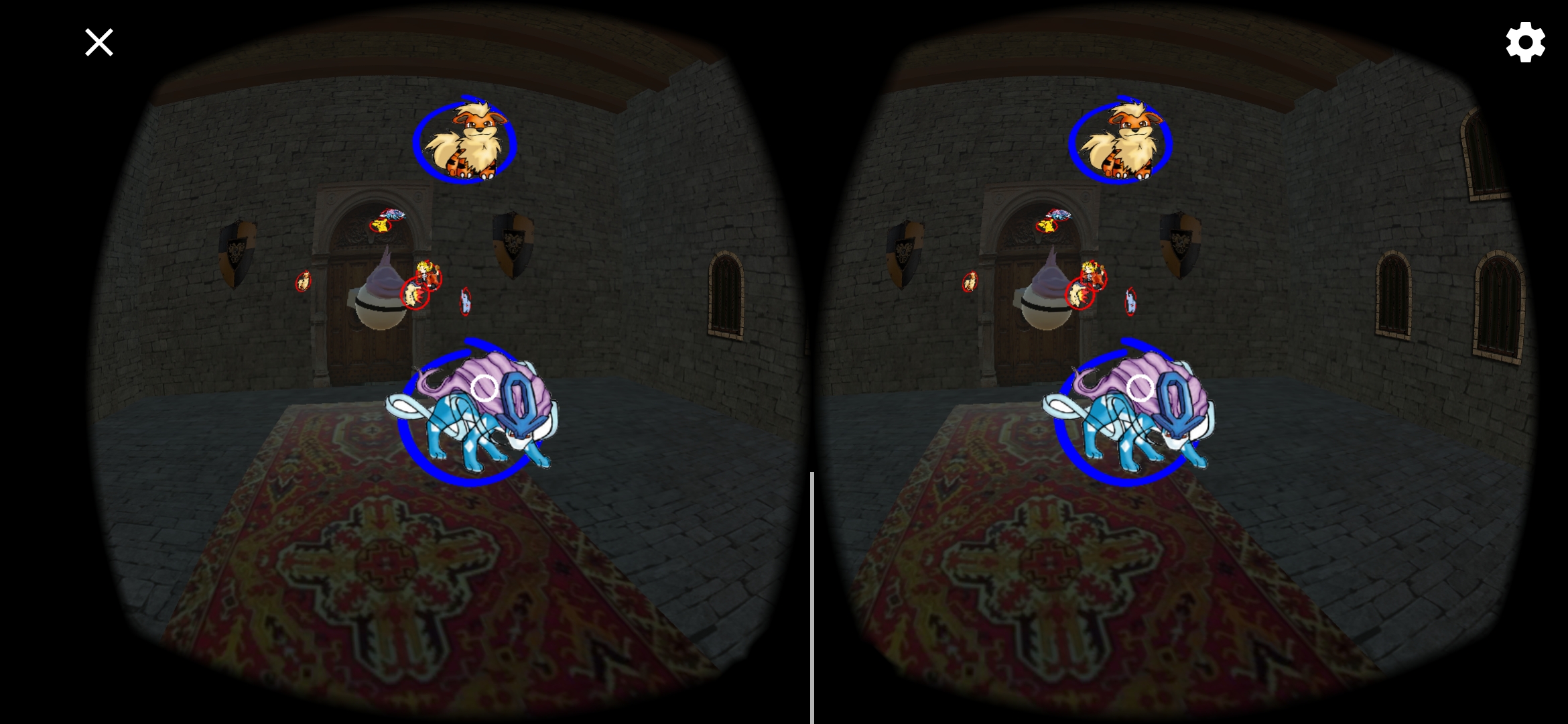

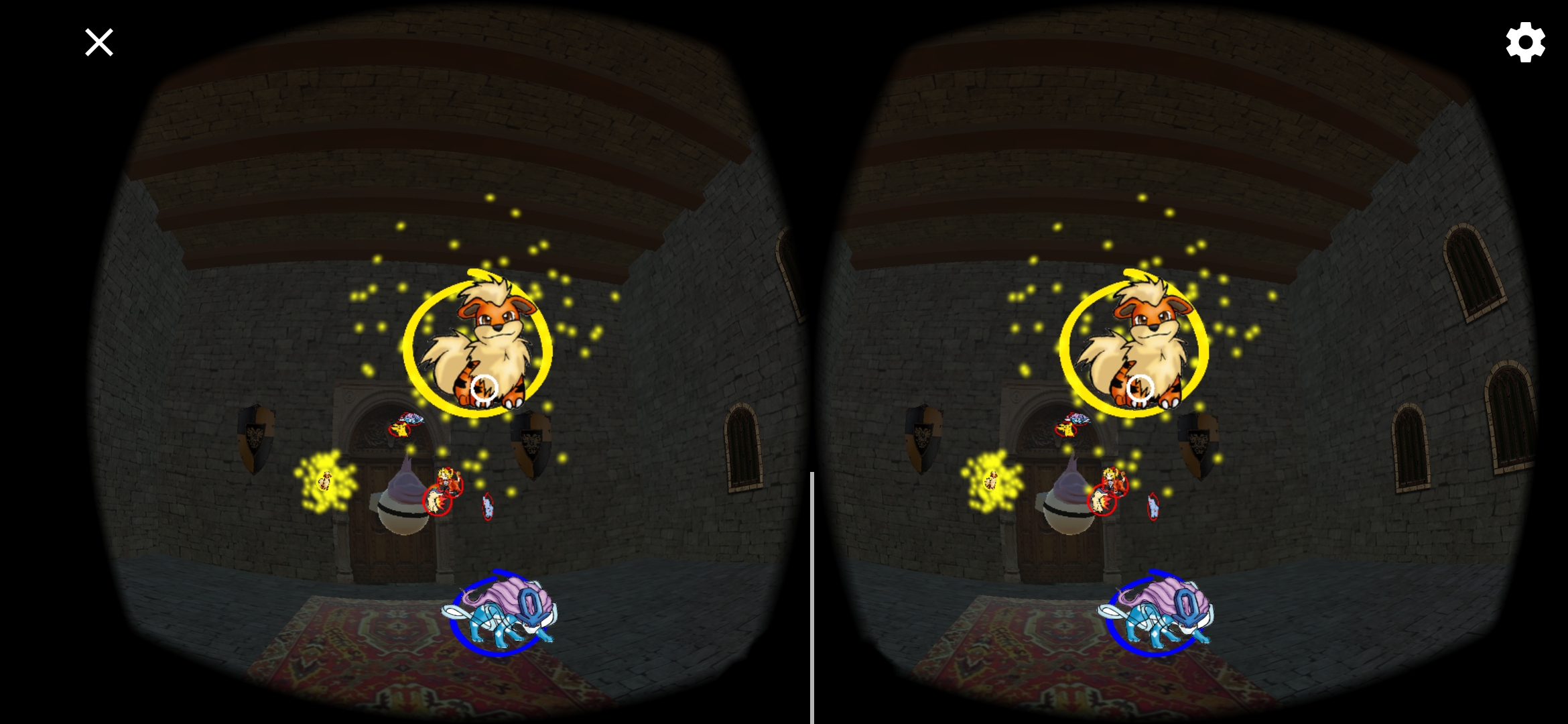

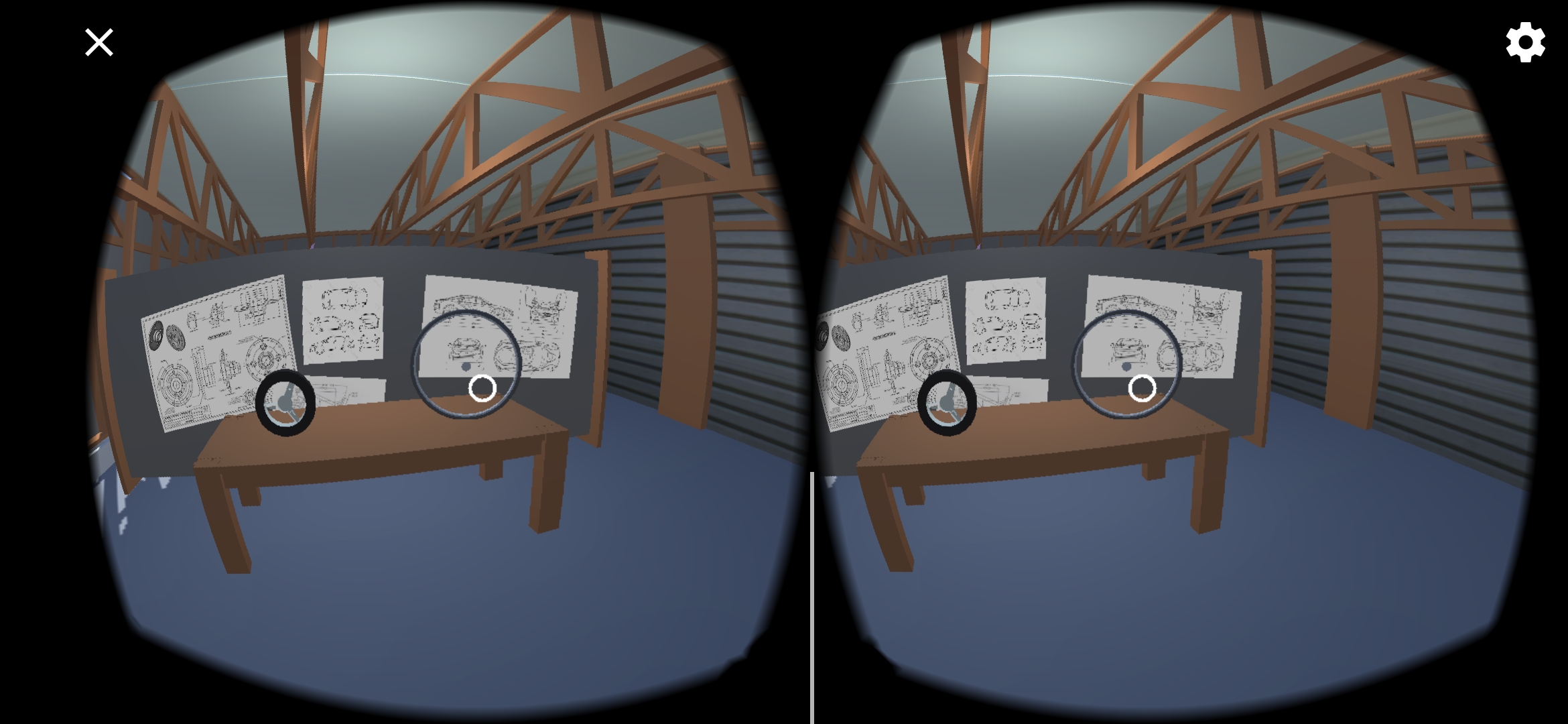

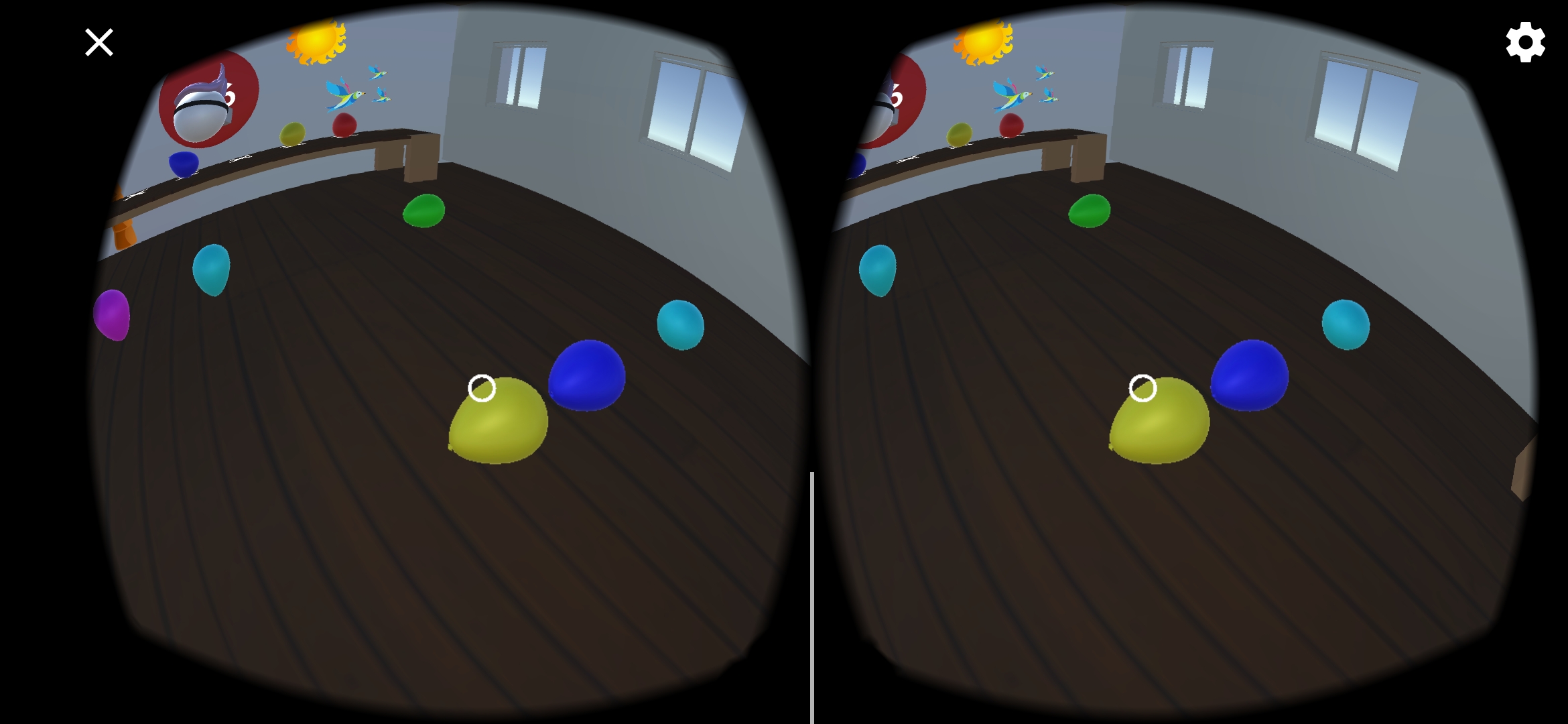

Wildcard

The purpose of this work is to collaborate with medical associations to help children with neurodevelopmental disorders (NDD) provide for themselves, reducing their dependence on third parties. The desired end state is personal and social autonomy, which enables integration in the real world. To reach this goal, the project leverages immersive virtual reality through a special viewer, which allows patients to stay focused on the exercise to be performed. Such therapeutic sessions trigger a learning process aimed at a seamless understanding of the surrounding world. This study led to the construction of Wildcard: a framework for the creation, management, and storytelling of activities. These stories help children face the challenges of everyday life. The system has been designed in cooperation with the non-profit therapy center “L’abilità”, resulting in an intelligent and immersive platform for children with autism. It fosters cognitive maturation through playful entertainment that becomes a life lesson.

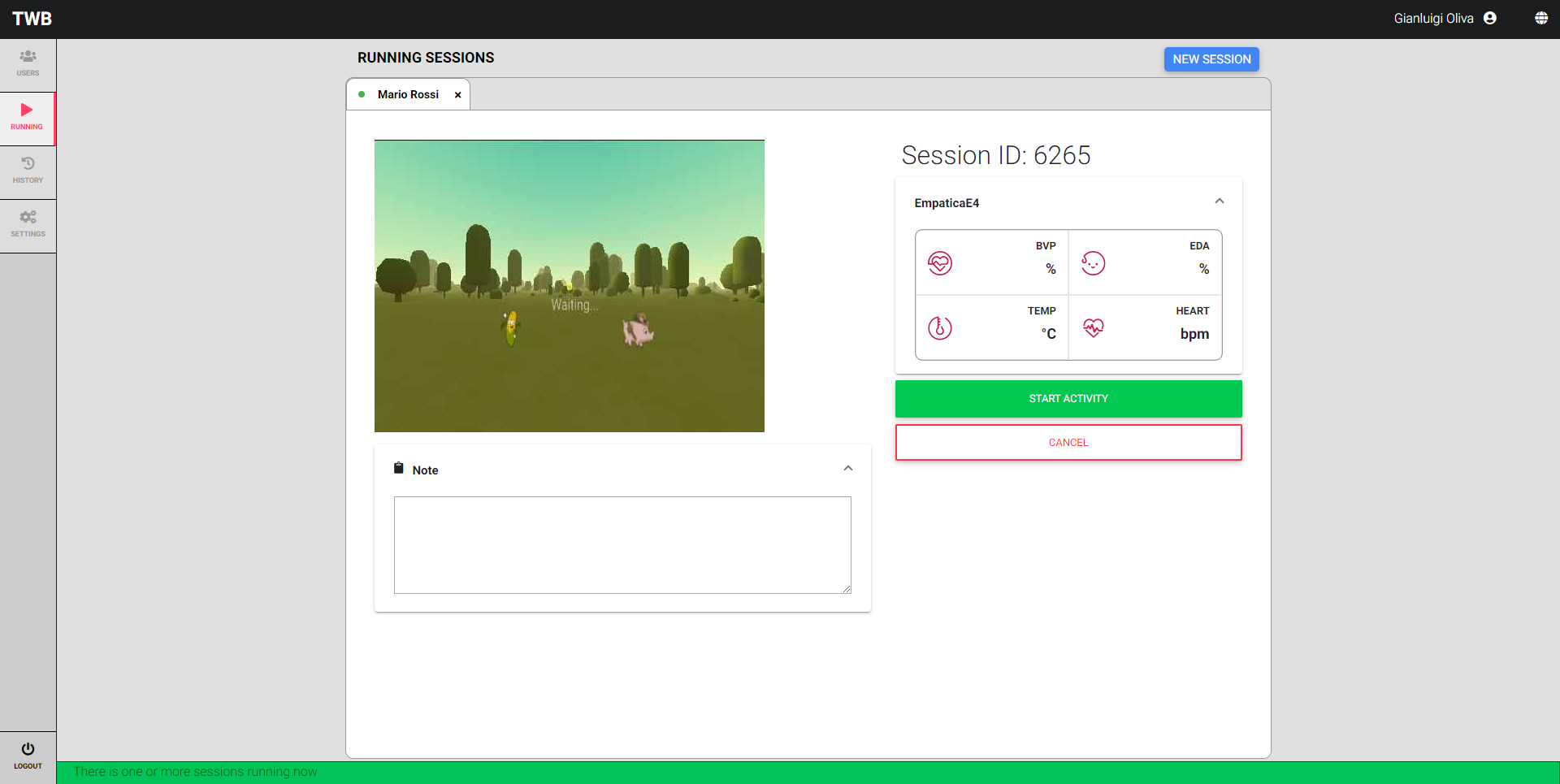

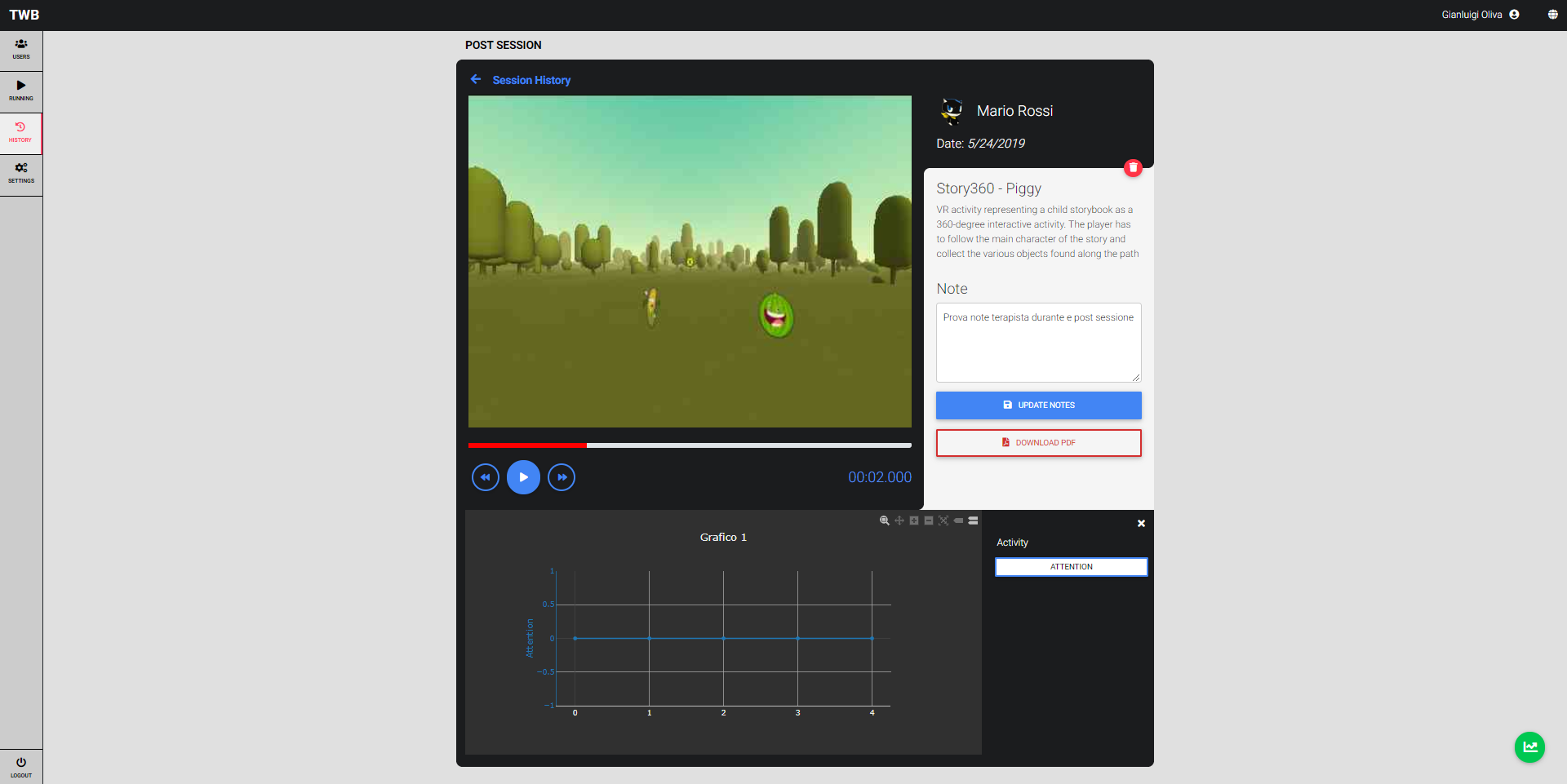

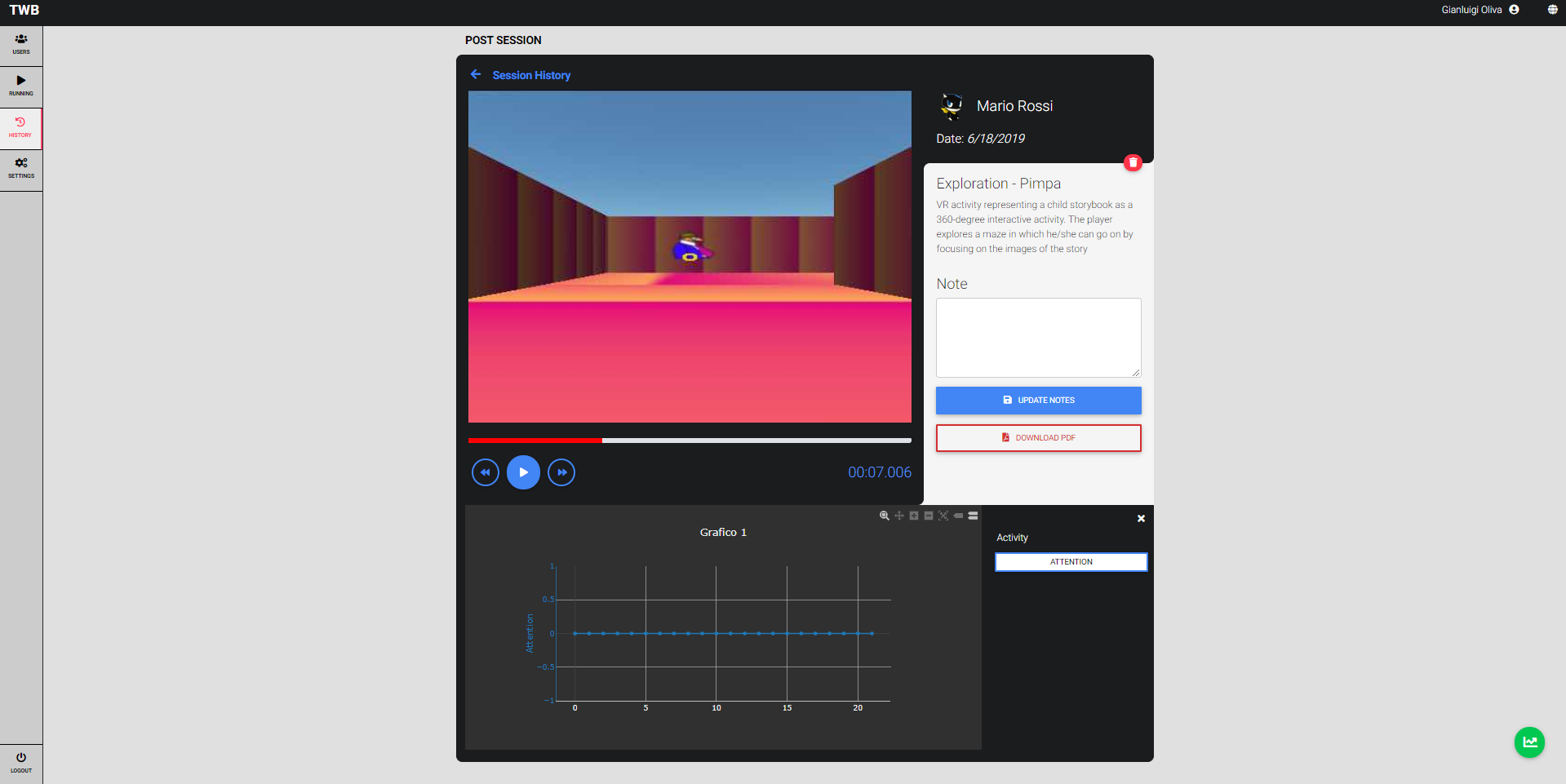

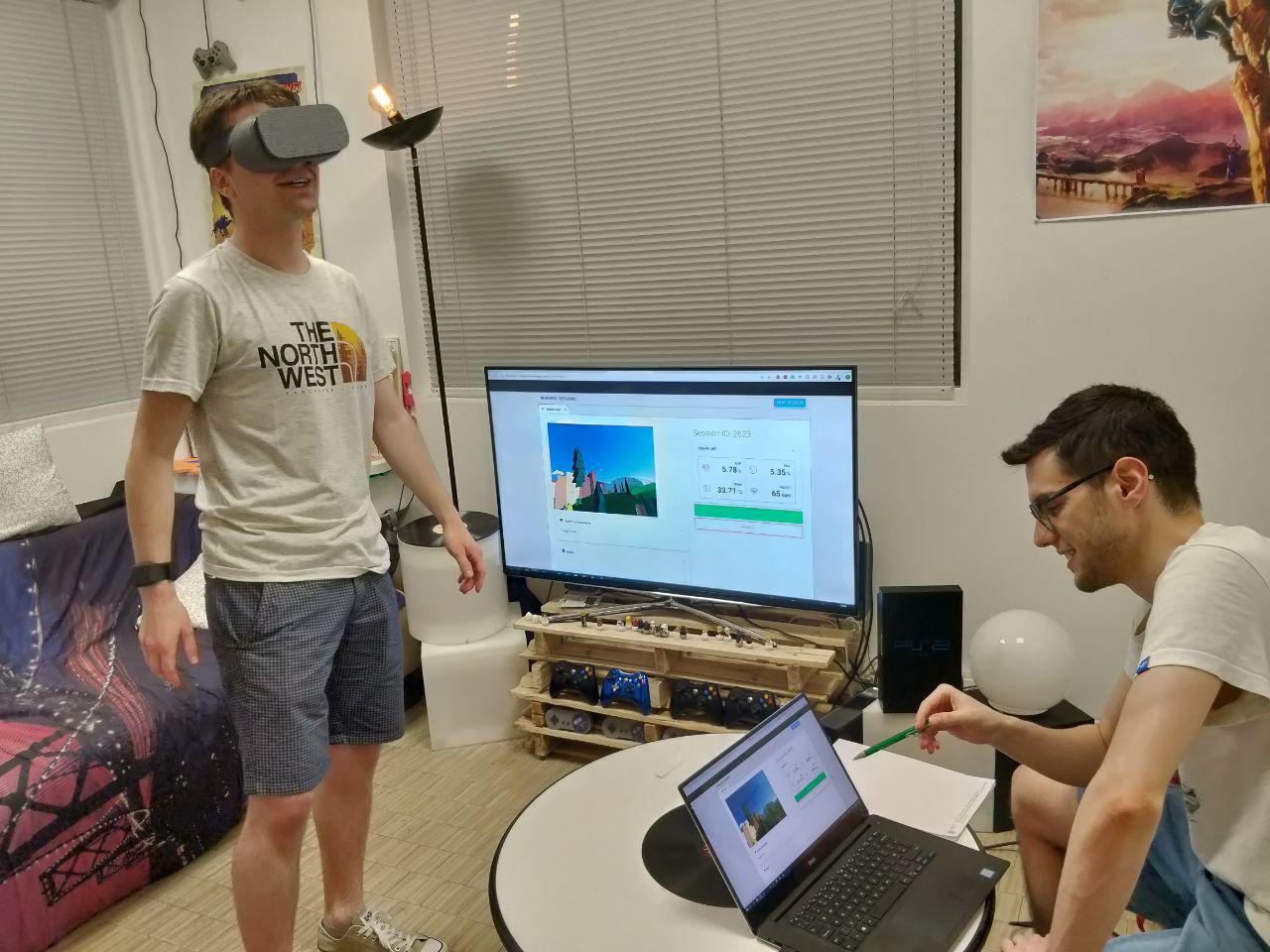

TWB

TWB is an EIT-funded project that addresses the therapeutic needs of persons with neuro-functional impairments (NFI), e.g. attention deficits or mental decline. We offer innovative services that bridge wearable VR and MR apps with bio-sensing devices, integrated in a customizable platform for patient care, therapy management, and data analysis.

TWB services would enable novel treatments for adults with NFI to mitigate mental decline and for children with NFI to develop cognitive-behavioral skills, improving life quality for these subjects and reducing social costs.

Social MatchUP

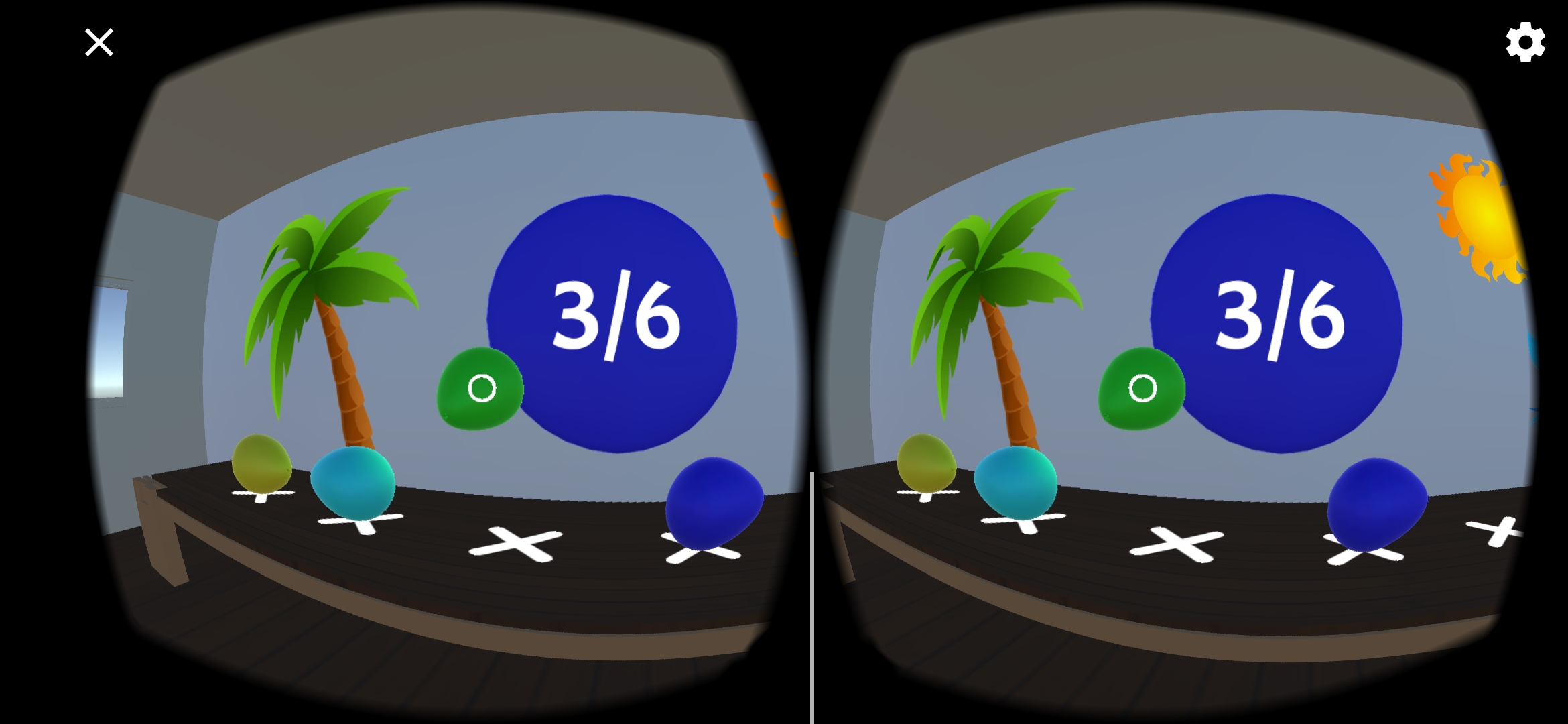

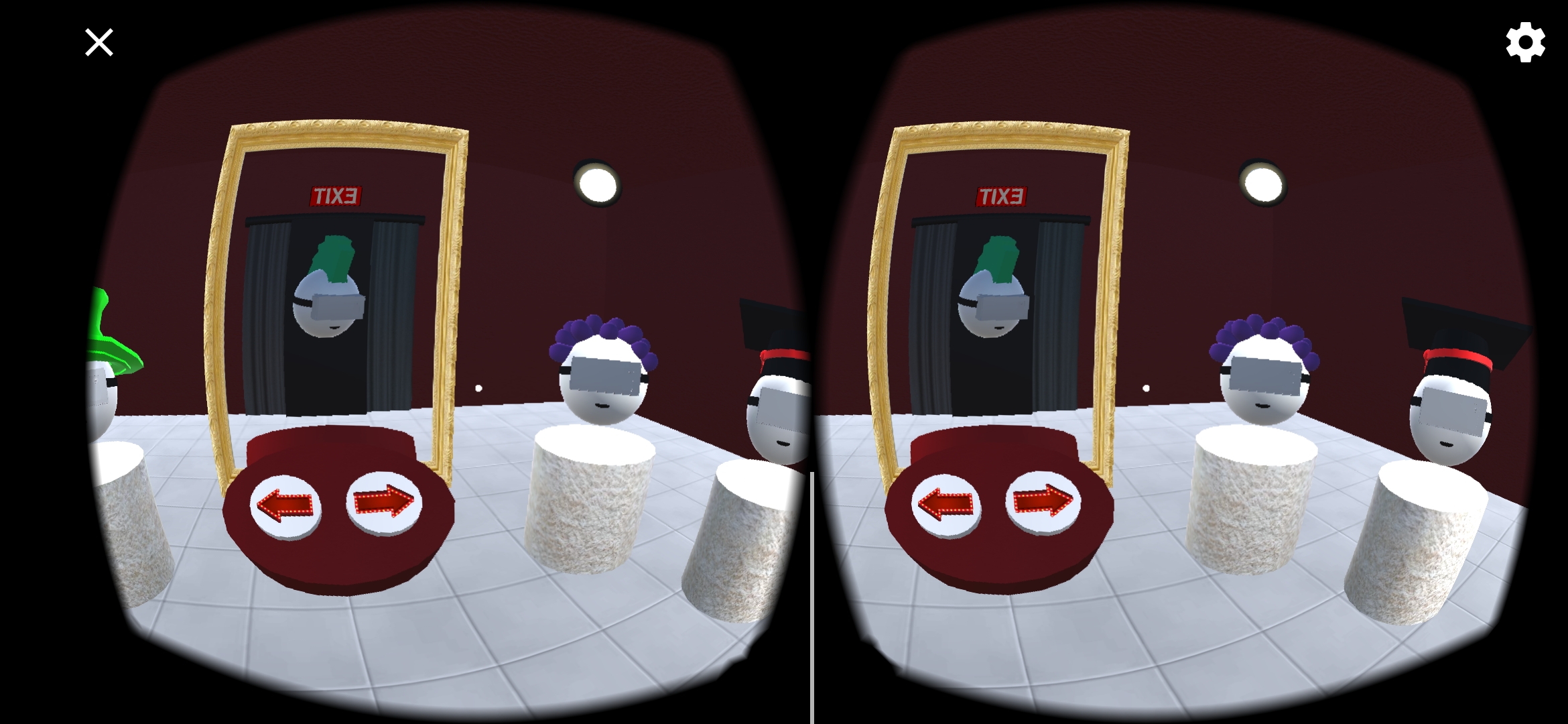

Social MatchUp is a multiplayer Virtual Reality mobile game for children with NDD.

Shared virtual reality environments (SVREs) allow children with NDD to interact in the same virtual environment without the possible discomfort of having a real person in front of them. The application has been designed in collaboration with psychologists and is the result of a participatory design process with people with NDD.

The goal of the game is to enhance social and communication skills. Working in pairs, players have to pair up matching images by staring at them; to agree on which image to look at, they have only one way to communicate: speaking.

Conversational Agents

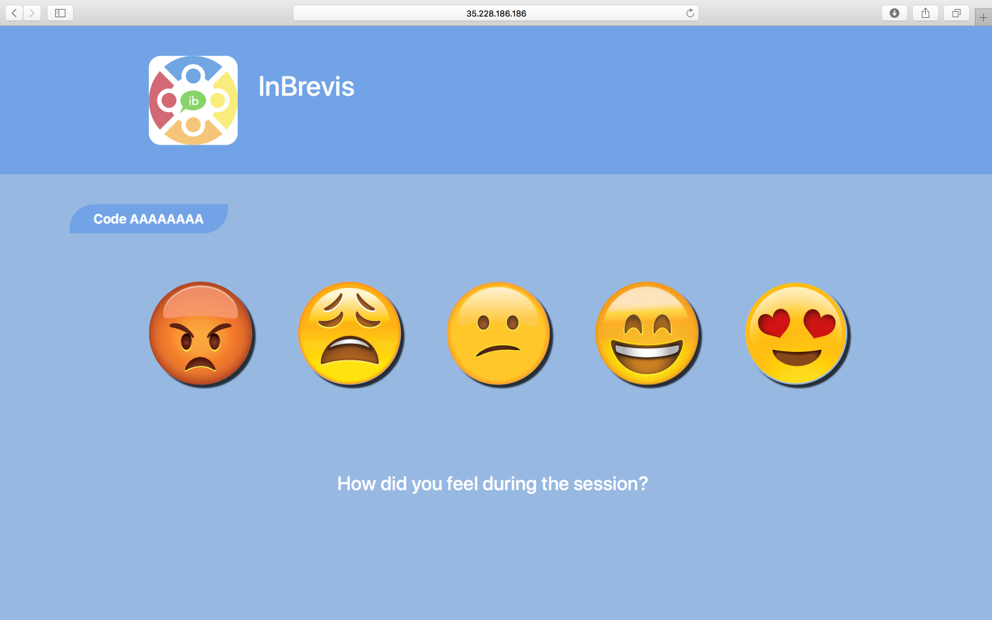

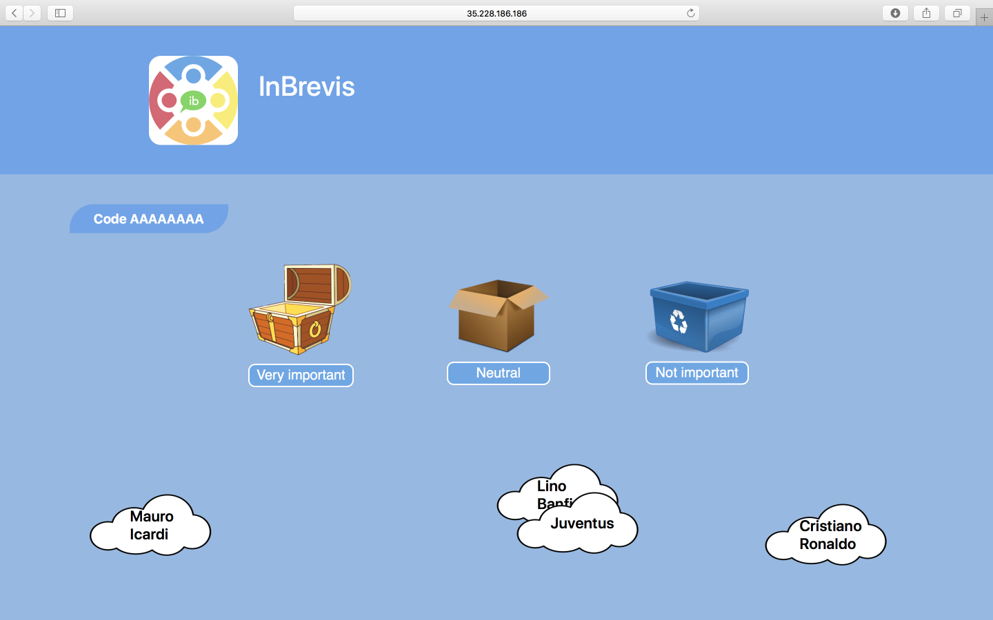

In Brevis

The aim of the project was to develop a system that exploits NLP techniques to support therapists in group therapy sessions with patients suffering from neurodevelopmental disorders (NDD). In this kind of session, patients speak freely, expressing their thoughts and feelings. Two main problems were highlighted: it is extremely hard to gather insights into the thoughts of every single patient during group therapy, and patients often stop coming to therapy because they feel uncomfortable or uninterested. The developed system takes these problems into account and tries to offer a solution. The therapist uses an iOS application to manage and record a session, as well as to extract the content of the conversation using NLP techniques. The extracted topics and some general information about the session are saved in a database. A web application allows patients to interact with the content of the session in a more private way, rating the perceived importance of each topic and giving emotional feedback about the session as a whole. The therapist, in turn, can visualize in various ways the data gathered from the patients' interactions to develop a better understanding of how they felt.

ISI

- it is simple to use and to access (it is designed as a web application)

- it facilitates communication and trains progressive self-knowledge

BEEH

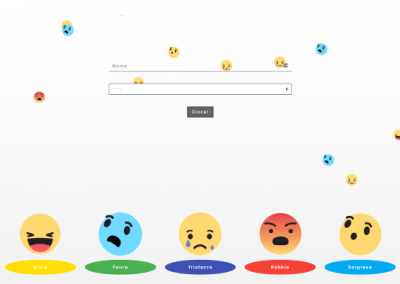

Emoty

Emoty does not act as a virtual assistant for daily-life support. Instead, it aims to help people with NDD develop better emotional control and self-awareness, which would enhance their communication capabilities and consequently improve their quality of life. Emoty exploits conversational technologies together with Machine Learning and Deep Learning techniques to recognize emotions from text and from voice, the latter based on processing the pitch of the user's audio.
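As a rough illustration of what "processing the pitch" of a voice signal can involve, the sketch below estimates the fundamental frequency of a single audio frame with plain autocorrelation. This is an assumption made for illustration, not Emoty's actual pipeline: the function name `estimate_pitch_hz`, the 50 to 400 Hz search range, and the synthetic test tone are all hypothetical.

```python
import math

def estimate_pitch_hz(samples, sample_rate, fmin=50.0, fmax=400.0):
    """Estimate the fundamental frequency of one frame via autocorrelation.

    The search range (fmin, fmax) is an illustrative assumption that roughly
    covers typical speaking voices.
    """
    min_lag = int(sample_rate / fmax)  # shortest period considered
    max_lag = int(sample_rate / fmin)  # longest period considered
    best_lag, best_score = None, 0.0
    for lag in range(min_lag, min(max_lag, len(samples) - 1) + 1):
        # Correlate the frame with a copy of itself shifted by `lag` samples.
        score = sum(samples[i] * samples[i + lag]
                    for i in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    # No positive correlation peak means no pitch estimate for this frame.
    return sample_rate / best_lag if best_lag else None

# Synthetic 200 Hz tone standing in for a voiced speech frame.
sample_rate = 8000
frame = [math.sin(2 * math.pi * 200 * n / sample_rate) for n in range(400)]
pitch = estimate_pitch_hz(frame, sample_rate)
print(pitch)
```

In a real system, a per-frame pitch track like this would be only one of several inputs to an emotion classifier; the classifier itself is outside the scope of this sketch.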

Mars

An inclusive and Universal Tool for Children with Language Disorders and Autism.

Communication can be defined as the understanding and exchanging of meaningful messages. The role of communication is central to the lives of human beings since, every day, we use language to interact with the world around us. Linguistic skills play a fundamental role in this scenario, and Language Disorders (LD) are impairments that limit the processing of linguistic information. Early and accurate identification of LD is thus essential to promote lifelong learning and well-being. The MARS project offers a concrete solution to this problem by creating a speech data gathering tool for language-independent identification of developmental language disorders. MARS analyzes the babbling productions of children in typical and atypical populations through a playful activity. The focus of the project is to analyze the temporal and spectral features of the audio that reflect an individual's prosodic, articulatory, and linguistic vocal characteristics, as well as recurrent patterns corresponding to specific anticipatory skills. MARS can become an invaluable tool for providing children in need with precious support.
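To make "temporal and spectral features" more concrete, the sketch below computes three common frame-level descriptors using only the Python standard library: RMS energy and zero-crossing rate (temporal) and the spectral centroid (spectral). The choice of these three features, the function names, and the synthetic test signal are assumptions for illustration; the text does not specify which features MARS actually extracts.

```python
import cmath
import math

def rms_energy(frame):
    """Temporal feature: root-mean-square amplitude of the frame."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))

def zero_crossing_rate(frame):
    """Temporal feature: fraction of adjacent sample pairs that change sign."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a < 0) != (b < 0))
    return crossings / (len(frame) - 1)

def spectral_centroid_hz(frame, sample_rate):
    """Spectral feature: magnitude-weighted mean frequency (naive DFT)."""
    n = len(frame)
    weighted, total = 0.0, 0.0
    for k in range(n // 2 + 1):  # non-negative frequency bins only
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(frame))
        magnitude = abs(coeff)
        weighted += (k * sample_rate / n) * magnitude
        total += magnitude
    return weighted / total if total else 0.0

# Synthetic 1 kHz tone standing in for a recorded vocalization frame.
sample_rate = 8000
frame = [math.sin(2 * math.pi * 1000 * i / sample_rate + 0.1) for i in range(256)]
features = {
    "rms": rms_energy(frame),
    "zcr": zero_crossing_rate(frame),
    "centroid_hz": spectral_centroid_hz(frame, sample_rate),
}
print(features)
```

The naive DFT keeps the example dependency-free; for real recordings an FFT routine from a numerical library would be the usual choice.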

Voxana

Voice-based conversational agents (CAs) and chatbots are becoming increasingly embedded in people's homes: 46% of United States adults use them in their daily routines. The best known are Apple's Siri, Amazon's Alexa (over 8 million users in January 2017), Google Assistant, and IBM's conversational services. Facebook alone has 300,000 active chatbots powering 8 billion interactions between consumers and businesses every month. Against this backdrop, VoxAna originates from an international collaboration between academia and industry, with Politecnico di Milano, TIM, Alfstore, and GroupeSEB as partners, and is funded by EIT Digital. It is an analytics solution for voice-controlled connected products. VoxAna intends to help marketing professionals convert voice interactions into meaningful insights. It makes the voice of the customer tangible by analyzing the voice channel to detect users' expectations and turn tone into comprehensive insights. In more detail, as consumer brands keep looking to fully understand the user's context, VoxAna is designed to deliver more personalized and contextualized experiences using text analysis (via speech-to-text) together with sentiment and emotion analysis (of both content and voice tone). VoxAna offers a dashboard with metrics and insights for voice-controlled products or services, which can be used to conduct personalized market research.

Smart-Objects

Moovy

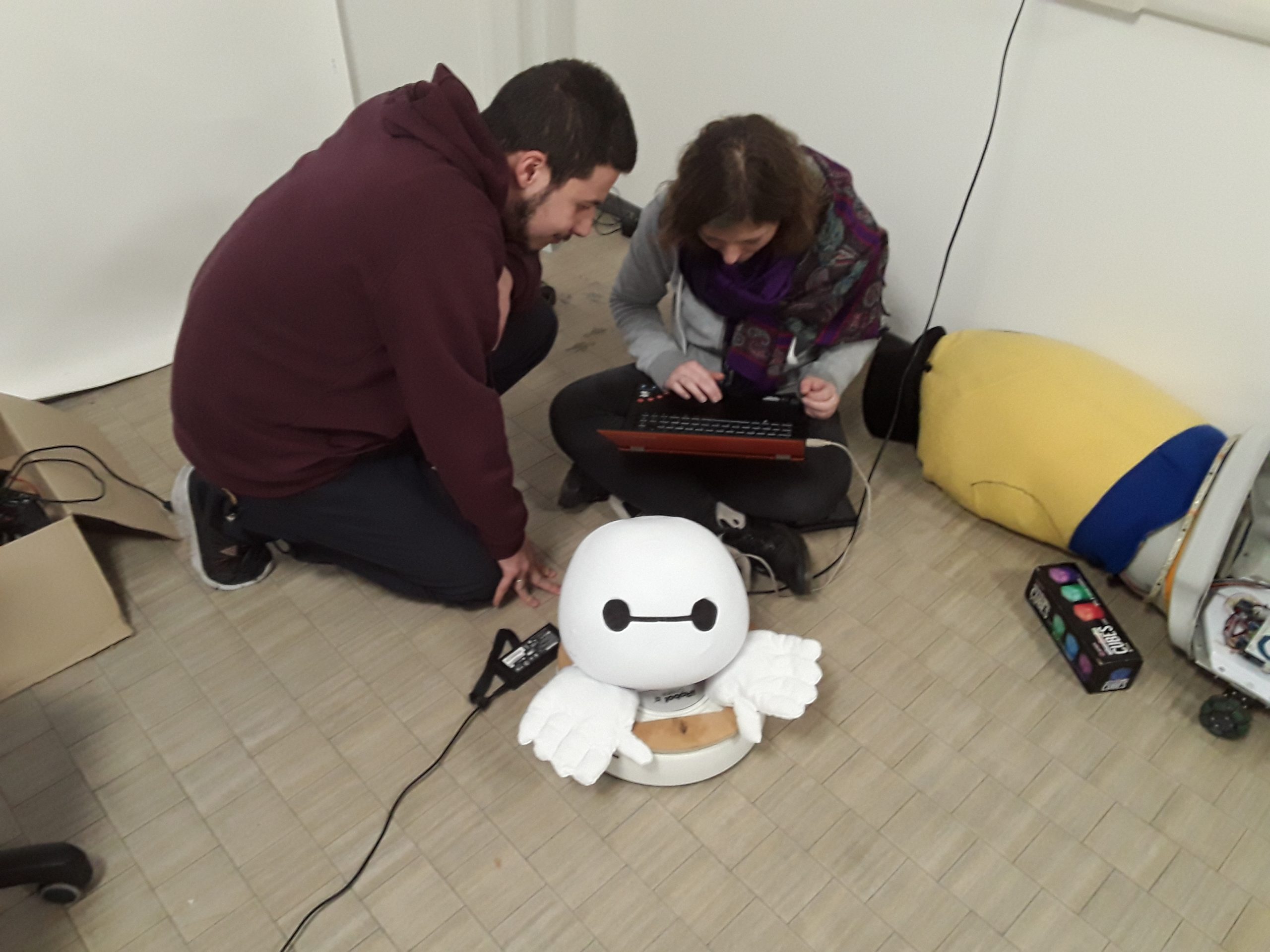

Puffy

- general design guidelines reported in the current literature on socially assistive robots for autistic children;

- lessons learned from our own previous experience on robots for children with NDD;

- feedback and suggestions on the progressive prototypes of Puffy offered by a team of 15 therapists (psychologists, neuro-psychiatrists, and special educators) from two different rehabilitation centers who have long-term, everyday experience of NDD subjects (children and adults) and have been collaborating with our research over the last 5 years.

PLET

- “smartify” physical toys and transform them into “e-toys” (i.e., digitally controlled interactive objects)

- connect e-toys with the PLET service management platform, therefore bridging the system embedded in e-toys with the applications for the toy manufacturer.

This technology is the digital core of next-generation Smart Toys: it is responsible for managing the e-toy's interactive behaviour and transmitting (via Wi-Fi) the interaction data to the back end of the PLET service platform in a configurable, robust, and optimised way (e.g., pre-processing some raw data received from e-toy embedded devices such as RFIDs). This technology consists of an embedded platform that includes various hardware components and a software middleware. The embedded platform integrates commercial sensors and actuators, orchestrated by a powerful yet low-cost microcontroller and managed by software components designed ad hoc to be as efficient as possible and to make the most of the hardware embedded in the e-toy.
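One plausible form of the pre-processing mentioned above is collapsing bursts of repeated RFID reads into single events before they are sent to the back end. The sketch below is only an assumption about what such a step could look like: the function `collapse_rfid_reads`, the one-second window, the event layout, and the sample reads are hypothetical and are not taken from the PLET middleware.

```python
import json

def collapse_rfid_reads(reads, window_s=1.0):
    """Collapse bursts of reads of the same tag into single events.

    reads: iterable of (timestamp_seconds, tag_id) tuples, sorted by time.
    A read is dropped when the same tag was already seen within window_s,
    so a tag that stays on the reader produces one event, not hundreds.
    """
    last_seen = {}
    events = []
    for timestamp, tag_id in reads:
        previous = last_seen.get(tag_id)
        if previous is None or timestamp - previous > window_s:
            events.append({"t": timestamp, "tag": tag_id})
        # Refresh on every read so continuous presence stays a single event.
        last_seen[tag_id] = timestamp
    return events

# Hypothetical raw reads: tag A held on the reader, tag B touched once,
# then tag A presented again two seconds later.
raw_reads = [(0.0, "A"), (0.2, "A"), (0.4, "A"), (0.5, "B"), (0.9, "A"), (3.0, "A")]
events = collapse_rfid_reads(raw_reads)
payload = json.dumps(events)  # compact body that could be sent over Wi-Fi
print(payload)
```

Filtering on the device rather than on the server reduces the amount of data transmitted, which is the kind of optimisation the paragraph above alludes to.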

The document also includes the process performed to achieve the above results, including the following activities:

- selection of the hardware components – commercial sensors and actuators – to be embedded in e-toys;

- integration of the chosen hardware components, in terms of both electronics and software control;

- design of the PCB (printed circuit board), to be produced by an external manufacturer;

- middleware API specification and implementation.

Each of the above activities comprised intense technical testing of the progressive results.

Reflex

Reflex is a mirrored-camera mobile training application for persons with Neuro-Developmental Disorder (NDD). The game, offered through a cross-platform application for smartphones and tablets, bridges the digital and the physical worlds by tracking physical items placed on the table via a downward-looking mirror positioned on the device camera. This interaction paradigm, defined as phygital, together with its co-designed features and a first pilot study, reveals an unexplored potential for learning.

We chose to adopt a hybrid approach defined as “phygital”, as it digitally virtualizes a physical environment of Tangible Objects (TOs) that the user can manipulate. This approach uses a platform that augments a handheld computing device, such as a phone or tablet, and uses novel computer vision algorithms to sense user interaction with the tangible objects. This technology yields numerous advantages, including but not limited to:

- providing a low-cost alternative for developing a nearly limitless range of applications that blend physical and digital mediums by reusing existing hardware (e.g., the device camera);

- leveraging novel lightweight detection and recognition algorithms;

- having low implementation costs;

- being cross-compatible with existing computing device hardware;

- operating in real time to provide a rich, virtual experience;

- processing numerous interactions with one or more TOs simultaneously without overwhelming the computing device;

- recognizing TOs with substantially perfect recall and precision (e.g., 99% and 99.5%, respectively);

- being capable of adapting to lighting changes and to wear and tear of TOs;

- providing a collaborative tangible experience between users;

- being natural and intuitive to use;

- requiring few or no constraints on the types of TOs that can be processed (no specialized markers or symbols need to be included on the TOs for the platform to recognize them).
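For readers unfamiliar with the recall and precision figures quoted above, the sketch below shows how the two metrics are computed from detection counts. The counts are invented purely so that the result lands near the quoted 99% recall and 99.5% precision; they are not data from the Reflex pilot study.

```python
def precision_recall(true_positives, false_positives, false_negatives):
    """Return (precision, recall) for a set of object detections.

    precision: share of reported detections that were correct.
    recall: share of objects actually present that were detected.
    """
    precision = true_positives / (true_positives + false_positives)
    recall = true_positives / (true_positives + false_negatives)
    return precision, recall

# Illustrative counts only: 199 correct detections, 1 spurious detection,
# and 2 tangible objects that were on the table but missed.
precision, recall = precision_recall(
    true_positives=199, false_positives=1, false_negatives=2
)
print(f"precision={precision:.3f} recall={recall:.3f}")
```

High precision means the platform rarely reports an object that is not there, while high recall means it rarely misses one that is; an interactive game benefits from both.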